The Smart Way to Spot and Fix a Biased AI Decision

Introduction

Artificial intelligence is everywhere. It helps decide who gets a job, who qualifies for a loan, how patients are evaluated, and even who is granted parole. But here’s the uncomfortable truth:

AI can be biased.

And the scariest part? Most people don’t know how to spot it.

From facial recognition tech that misidentifies people of color to hiring algorithms that silently exclude qualified candidates, AI bias isn’t just possible; it’s already happening. Because AI decisions feel fast, neutral, and “objective,” we rarely stop to question them.

This guide shows you how to spot biased AI and how to ask smarter questions before you trust its outputs.

What Does “AI Bias” Actually Mean?

Bias in AI occurs when a system systematically favors or discriminates against certain groups. The bias doesn’t originate in the machine itself; it comes from:

- The data it was trained on

- The design choices made by developers

- The objectives it was optimized to fulfill

Here’s what that looks like in real life:

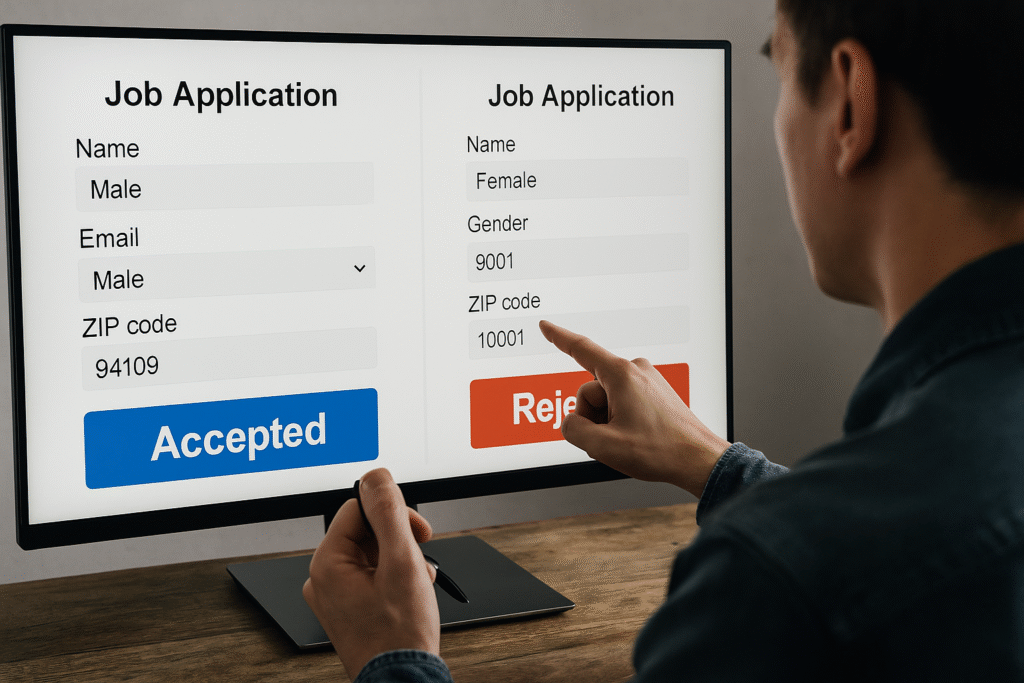

- A résumé filter that silently ranks male candidates higher

- Predictive policing software that targets certain neighborhoods

- Credit scoring systems that penalize you based on zip code

- Health models that under-diagnose illnesses in non-white patients

Bias in AI leads to real-world harm. But because the process is invisible, many people never realize it’s happening.

7 Warning Signs That AI Might Be Biased

How can you tell if an AI system is behaving unfairly? Look for these red flags:

- The outcomes consistently favor one group over others. If a tool keeps rejecting similar applicants from a particular demographic, something’s off.

- There’s no transparency about how the AI works. “It’s a black box” isn’t good enough, especially when the decision affects your future.

- The training data isn’t representative. If the data reflects only one gender, race, or economic group, the model is likely to perform poorly for everyone else.

- Human oversight has been removed entirely. Systems that make decisions with no human review are especially risky.

- There’s no appeals process. If you’re denied credit or a job and can’t contest the decision, the system is too closed.

- Sensitive attributes are factored in indirectly. For instance, ZIP codes can serve as a proxy for race, reintroducing discrimination even when race itself is excluded.

- Performance drops significantly for minority groups. If an AI works well for one group and fails for another, it isn’t ready for deployment.
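
To make the ZIP-code warning concrete, here is a minimal sketch of a proxy check: it asks how well a single feature predicts group membership on its own. The field names (`zip_code`, `group`) and the toy records are hypothetical, and this is only one simple way to measure a proxy, not a standard audit procedure.

```python
from collections import Counter, defaultdict


def proxy_strength(records, feature, protected):
    """Compare how well `feature` predicts `protected` against a no-information baseline.

    Returns (baseline_accuracy, proxy_accuracy). The baseline always guesses the
    overall majority group; the proxy guesses the majority group within each
    value of `feature`. A large gap means the feature leaks group membership.
    """
    overall = Counter(r[protected] for r in records)
    baseline = overall.most_common(1)[0][1] / len(records)

    by_value = defaultdict(Counter)
    for r in records:
        by_value[r[feature]][r[protected]] += 1
    # For each feature value, count how many records the majority guess gets right.
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return baseline, correct / len(records)


# Hypothetical toy data: two ZIP codes that are heavily segregated by group.
applicants = (
    [{"zip_code": "11111", "group": "A"}] * 45
    + [{"zip_code": "11111", "group": "B"}] * 5
    + [{"zip_code": "22222", "group": "A"}] * 5
    + [{"zip_code": "22222", "group": "B"}] * 45
)

baseline, proxy = proxy_strength(applicants, "zip_code", "group")
print(f"baseline={baseline:.2f} proxy={proxy:.2f}")  # baseline=0.50 proxy=0.90
```

Here ZIP code alone identifies the group correctly 90% of the time, versus 50% by guessing, so dropping the race column would not remove the information. Because the check is measured on the same data it summarizes, features with many rare values will look stronger than they really are.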

Real-World Examples of Biased AI

1. COMPAS Recidivism Prediction Tool (US Courts)

This tool predicted the likelihood that a defendant would reoffend. A 2016 ProPublica analysis found that among defendants who did not go on to reoffend, Black defendants were nearly twice as likely as white defendants to have been incorrectly labeled high-risk.

2. Amazon’s AI Hiring Tool

Amazon scrapped an internal hiring AI after discovering it penalized resumes that included terms like “women’s chess club.” Why? It had been trained on 10 years of male-dominated hiring data.

3. Healthcare Algorithms

A 2019 study found that an algorithm used to decide which patients qualified for extra care was less likely to flag Black patients than equally sick white patients. The reason was not that Black patients were healthier, but that the model used past healthcare spending as a proxy for need. Because less money has historically been spent on Black patients’ care, the model concluded they needed less.
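
The COMPAS finding is about error rates, not overall accuracy: among people who did not reoffend, how often was each group labeled high-risk? The sketch below computes that false positive rate per group. The record layout and the numbers are hypothetical and are not the COMPAS data.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """False positive rate per group: share of actual negatives predicted positive.

    Each record is (group, predicted_high_risk, actually_reoffended).
    Only people who did NOT reoffend enter the denominator.
    """
    negatives = defaultdict(int)
    false_positives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {g: false_positives[g] / negatives[g] for g in negatives}


# Hypothetical records: (group, labeled high-risk?, reoffended?)
records = (
    [("X", True, False)] * 40 + [("X", False, False)] * 60 + [("X", True, True)] * 50
    + [("Y", True, False)] * 20 + [("Y", False, False)] * 80 + [("Y", True, True)] * 50
)

rates = false_positive_rate_by_group(records)
print(rates)  # {'X': 0.4, 'Y': 0.2}
print(f"ratio X/Y: {rates['X'] / rates['Y']:.1f}")  # ratio X/Y: 2.0
```

In this toy data, people in group X who never reoffended are labeled high-risk twice as often as their counterparts in group Y. A single overall accuracy number can hide a gap like this, which is why fairness audits usually report error rates separately for each group.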

Questions to Ask About Any AI System

When you encounter an AI decision, whether in HR, healthcare, finance, or the legal system, start by asking:

- What data was this trained on? Was it balanced? Was it audited?

- Are sensitive features (race, gender, disability) accounted for or ignored? Ignoring them isn’t always the fairer choice; sometimes you need them to detect and correct for historical bias.

- Was the model tested for fairness across demographic groups? Look for performance disparities.

- Is the decision explainable? Can someone tell you why you were denied or approved?

- Is there a human in the loop? Who can override or question the AI’s judgment?

- Can I appeal this decision? Systems without recourse are inherently unfair.

How to Spot Bias as a Non-Technical User

You don’t need to be a developer to detect bias. Here’s how:

Compare Outcomes

Does the AI consistently reject one type of profile? For instance, if a resume filter excludes all career-switchers, that’s a red flag.
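
If you can collect outcomes (for example, how many applicants from each group a screening tool advanced), comparing selection rates is a simple first check. The sketch below divides each group’s selection rate by the highest group’s rate. The 0.8 threshold follows the “four-fifths rule” of thumb from US employment-selection guidance; it is a screening heuristic, not a legal or statistical verdict, and the counts are made up.

```python
def impact_ratios(outcomes):
    """Selection rate of each group relative to the most-selected group.

    `outcomes` maps group -> (number selected, number of applicants).
    Assumes every group has at least one applicant and someone was selected.
    """
    rates = {g: selected / total for g, (selected, total) in outcomes.items()}
    best = max(rates.values())
    return {g: rate / best for g, rate in rates.items()}


def flagged(outcomes, threshold=0.8):
    """Groups whose impact ratio falls below `threshold` (four-fifths rule of thumb)."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical screening results: (advanced to interview, applied)
results = {
    "career_switchers": (12, 100),
    "same_field": (30, 100),
}

print({g: round(r, 2) for g, r in impact_ratios(results).items()})
print(flagged(results))
```

Here career-switchers advance at 40% of the rate of same-field applicants, well under the 0.8 line. A low ratio doesn’t prove discrimination on its own, but it tells you exactly where to start asking questions.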

Ask for Documentation

Ethical AI systems should come with documentation, such as “model cards,” that describes how they were trained and tested.
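
If you do get documentation, one quick way to judge its completeness is to compare it with the sections proposed in the original model-card paper (Mitchell et al., 2019): model details, intended use, factors, metrics, evaluation data, training data, quantitative analyses, ethical considerations, and caveats and recommendations. The sketch below is a crude keyword check built on that list; the draft text is invented, and a heading appearing in a document says nothing about whether its content is honest or adequate.

```python
# Section names from the "Model Cards for Model Reporting" proposal (Mitchell et al., 2019).
MODEL_CARD_SECTIONS = [
    "Model Details",
    "Intended Use",
    "Factors",
    "Metrics",
    "Evaluation Data",
    "Training Data",
    "Quantitative Analyses",
    "Ethical Considerations",
    "Caveats and Recommendations",
]


def missing_sections(document_text):
    """Return model-card sections whose names never appear in the text (case-insensitive)."""
    lowered = document_text.lower()
    return [s for s in MODEL_CARD_SECTIONS if s.lower() not in lowered]


# Hypothetical vendor documentation that covers only the basics.
draft = """
Model Details: gradient-boosted classifier, version 2.
Intended Use: pre-screening loan applications.
Metrics: overall accuracy on a held-out set.
"""
print(missing_sections(draft))
```

For this draft, every section about who the model was evaluated on, and how it performs for different groups, is missing, which is exactly the information you need to judge bias.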

Look for Human Oversight

Any system that affects your life, such as your job, housing, or medical care, should be supervised by a real person.

Try Counterfactual Testing

Change one variable, such as gender or ZIP code, keep everything else the same, and resubmit. If the result changes, that variable is influencing the decision.
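
When you can submit inputs to a system yourself, counterfactual testing can be automated. The sketch below resubmits the same application with one field swapped and reports any decision that changes. `score_application` is a hypothetical stand-in for the system under test; it deliberately penalizes one ZIP code so the test has something to find.

```python
from copy import deepcopy


def score_application(app):
    """Hypothetical, deliberately biased scorer used only to demonstrate the test."""
    score = app["income"] / 1000 + app["years_employed"] * 2
    if app["zip_code"] == "22222":  # a ZIP-code penalty: the kind of proxy we want to catch
        score -= 15
    return "approved" if score >= 60 else "denied"


def counterfactual_test(decide, application, field, alternatives):
    """Resubmit `application` with `field` swapped for each alternative value.

    Returns {alternative_value: decision} for every value whose decision
    differs from the decision on the original application.
    """
    original = decide(application)
    changed = {}
    for value in alternatives:
        variant = deepcopy(application)
        variant[field] = value  # change exactly one field, keep the rest identical
        decision = decide(variant)
        if decision != original:
            changed[value] = decision
    return changed


applicant = {"income": 52000, "years_employed": 5, "zip_code": "11111", "gender": "F"}
print(counterfactual_test(score_application, applicant, "zip_code", ["22222", "33333"]))  # {'22222': 'denied'}
print(counterfactual_test(score_application, applicant, "gender", ["M"]))  # {}
```

Note the second result: flipping gender changes nothing, yet the system still discriminates through ZIP code. A clean result on one attribute doesn’t clear a system, so test every field that could act as a proxy.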

Speak Up

Raise concerns if a decision feels unfair. You’re not being paranoid; you’re being smart.

Tools and Organizations Fighting AI Bias

Want to investigate or improve fairness? These tools and watchdogs are leading the charge:

- AI Fairness 360 (IBM): an open-source toolkit to detect and mitigate bias

- Google’s What-If Tool: a visual tool for inspecting model behavior

- The Algorithmic Justice League: advocates for equitable AI

- AI Now Institute: researches the social implications of AI

- Model Cards (proposed by Google researchers): a documentation framework that encourages transparent, ethical AI deployment

These tools and organizations help ensure AI serves everyone, not just the majority.

How to Advocate for Ethical AI

Bias in AI is a social issue, not just a technical one. Everyone has a role to play.

Learn the Basics

You don’t need to code to understand the basics of how AI works. Just ask smart questions.

Talk About It

Bring up AI ethics in work meetings, schools, and social circles. Awareness is the first step.

Demand Audits

Organizations should audit their systems for bias as routinely as they audit their finances, especially when those systems make high-impact decisions.

Support Regulation

Push for legislation that requires AI transparency, explainability, and fairness testing.

Promote Inclusive Development Teams

Diverse teams build better, less biased tools. Period.

Don’t Let “Automation” Be an Excuse

Challenge the idea that AI decisions are always more accurate or fair than humans.

Final Thoughts: Trust, But Verify

AI has incredible potential, but it’s not immune to the biases of the world we live in.

So next time a system tells you:

- “You’re not eligible.”

- “You didn’t qualify.”

- “You’re high risk.”

Pause. Ask:

“What was this trained on?”

“What does it optimize for?”

“Who does this system work best for, and whom does it fail?”

Because biased decisions made by machines are still biased decisions.

And we all deserve a world, and technology, that treats us fairly.