7 Shocking AI Developments Europe Can’t Ignore

Introduction: Europe’s AI Reckoning

Europe stands at a pivotal crossroads in the evolution of technology. No longer confined to research labs or tech startup dreams, artificial intelligence has become a foundational force, quietly but rapidly transforming how societies function. In 2025, AI does not just power search engines and social feeds. It is reshaping healthcare decisions, automating government services, influencing hiring choices, and determining who gets access to resources and opportunities. AI is no longer in the background; it is everywhere.

But with this rise comes an unsettling paradox: the more powerful and pervasive AI becomes, the less visible it is and the less the public understands it. What was once discussed in theoretical terms (algorithms, neural networks, machine learning) is now embedded in everyday life, often without the consent or understanding of the people it affects.

And this matters, because innovation without oversight is no longer innovation — it’s disruption. While many celebrate AI’s ability to improve efficiency, optimize logistics, or accelerate discovery, others are sounding alarms over how these systems are being used — and misused — across Europe.

Take, for example, the quiet spread of biometric surveillance in urban areas, the growing use of AI to make critical healthcare decisions behind closed digital doors, or the controversial deployment of emotion-detecting software in classrooms and courtrooms. These are not scenarios from some speculative tech dystopia. These are real, present-day deployments — and they are growing without clear guardrails.

Meanwhile, regulators race to catch up. The European Union, long known for its progressive stance on digital rights, has introduced the EU AI Act, aiming to become the global standard-bearer for trustworthy AI. Yet, as this article will explore, even that groundbreaking legislation risks being undermined by loopholes, lobbying, and lagging enforcement.

In this moment of reckoning, we must ask: Is Europe leading the world toward ethical, human-centered AI — or quietly enabling systems that erode trust, deepen inequality, and normalize surveillance?

This article breaks down seven shocking developments in the European AI landscape that cannot be ignored. They are urgent. They are unfolding now. And whether you’re a policymaker shaping regulation, a technologist building solutions, or simply a citizen interacting with AI in daily life, understanding these trends is no longer optional — it’s essential.

Because the future of AI in Europe isn’t just being coded. It’s being decided.

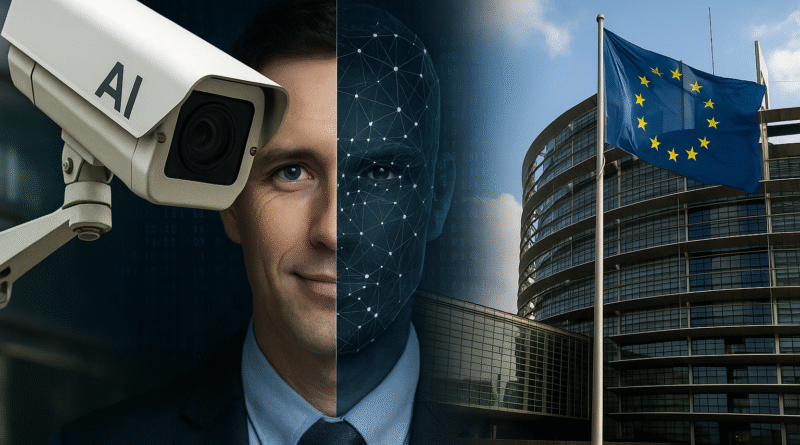

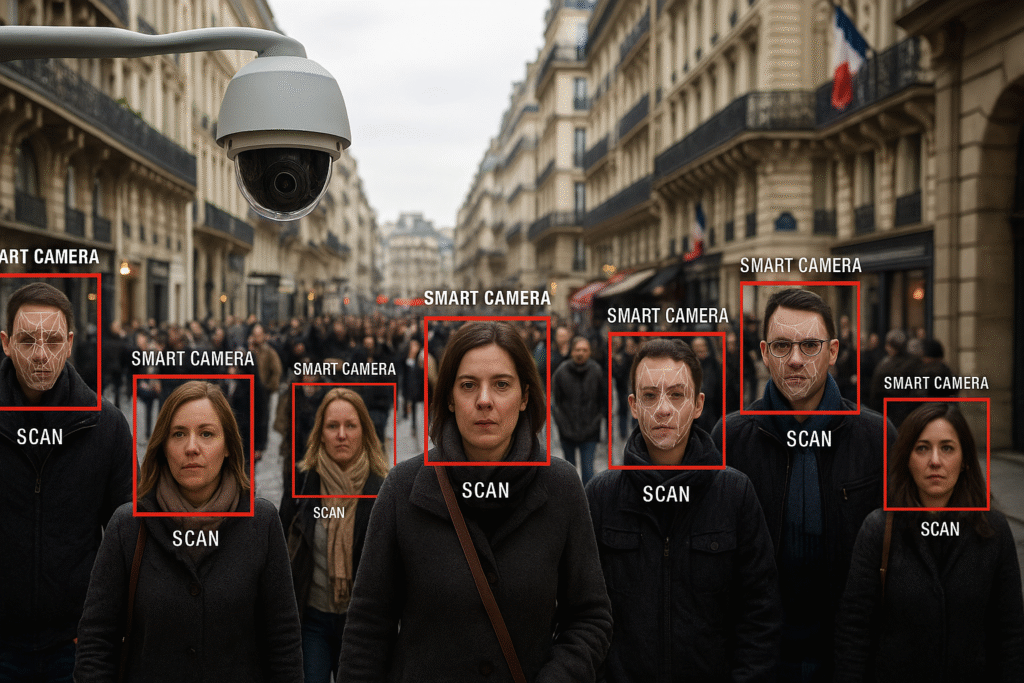

1. AI Surveillance Is Quietly Expanding Across Cities

In multiple European cities, from Paris to Warsaw, governments have quietly rolled out AI-powered surveillance systems. These tools include facial recognition, predictive policing models, and smart city sensors that track movement, behavior, and biometric patterns.

Despite privacy concerns and pushback from civil liberties groups, these systems are being deployed under the banner of safety and efficiency. Residents often don’t realize how much data is being collected, or how little control they have over it.

The European Data Protection Board has flagged this as a critical issue, warning that such deployments risk breaching the GDPR and eroding public trust.

2. The EU AI Act May Fail to Rein in Big Tech

The much-anticipated EU AI Act was expected to set a global gold standard for ethical AI use. But as of mid-2025, it faces criticism for being too watered down to truly hold Big Tech accountable.

Lobbying pressure has diluted key provisions, allowing companies to self-classify the risk levels of their AI systems. This opens the door to regulatory loopholes and minimal enforcement.

Experts warn that unless amended, the AI Act may become more symbolic than impactful, offering the illusion of oversight while letting powerful actors set their own rules.

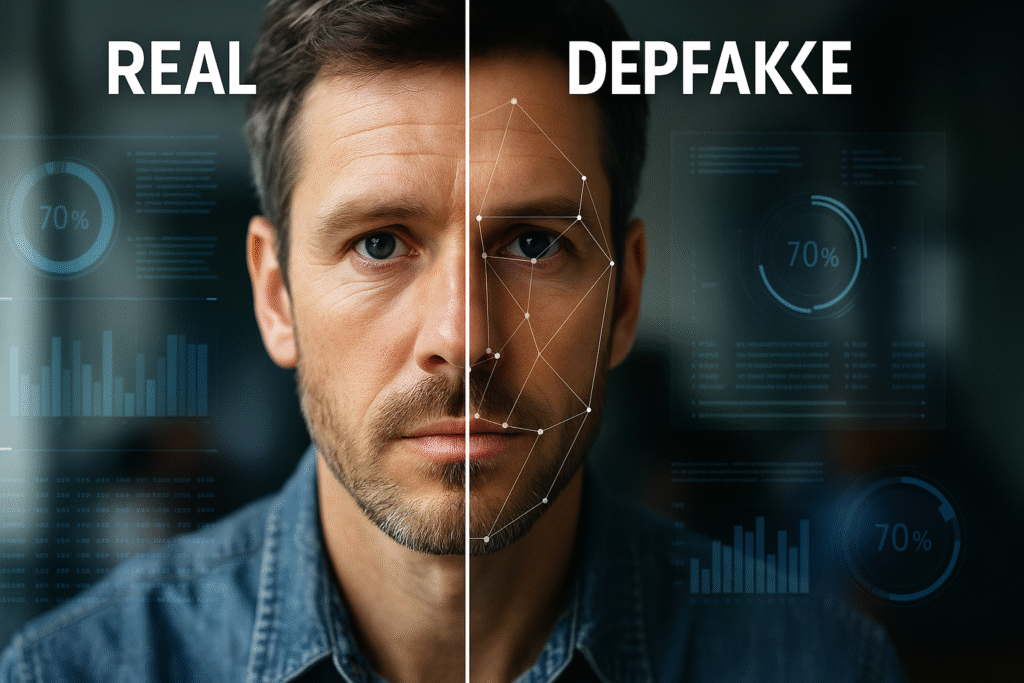

3. Deepfake Scandals Are Spreading Faster Than Laws

Deepfakes aren’t just about fake celebrity videos anymore; they’re affecting elections, legal cases, and public safety.

In 2025, several European countries experienced deepfake-driven misinformation campaigns that swayed local elections and defamed political candidates. Law enforcement struggles to keep up, and courts are overwhelmed with cases involving digital impersonation.

Current laws are ill-equipped to respond to AI-generated deception, especially when the line between satire and sabotage is increasingly blurry.

4. AI Is Disrupting Job Markets Without Replacement Plans

From manufacturing in Germany to legal review in the Netherlands, AI systems are replacing human labor at scale. And while productivity is rising, the human cost is being ignored.

Many EU governments still lack cohesive strategies to reskill displaced workers. Transition funding is slow, and tech training programs are underfunded.

This isn’t just an economic issue; it’s a societal one. Without intervention, AI could widen inequality and trigger long-term unemployment in vulnerable sectors.

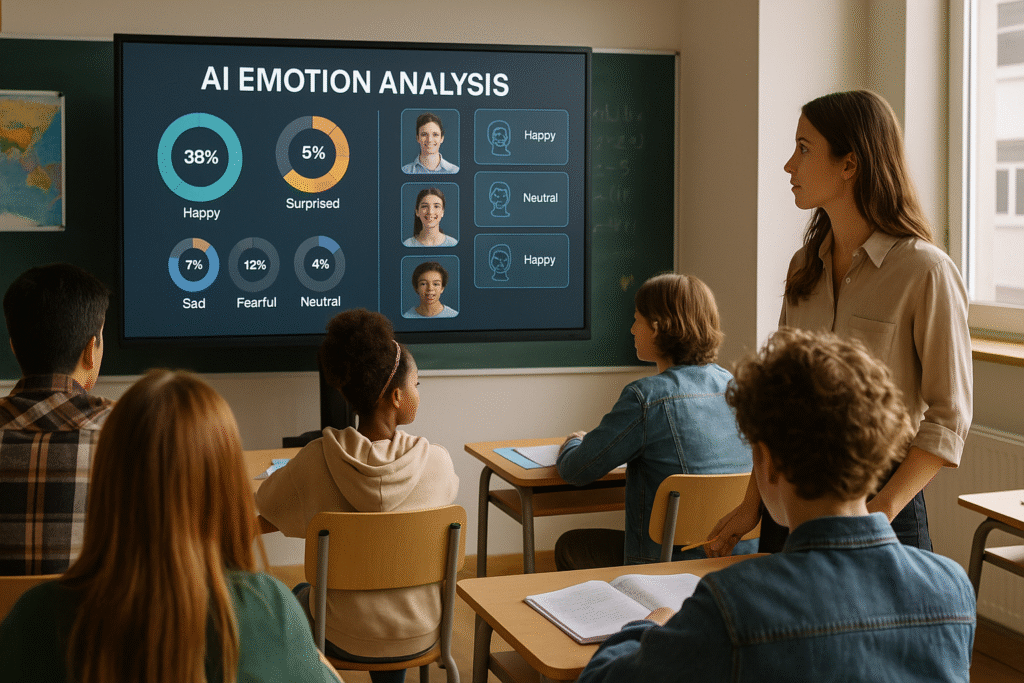

5. Emotion Recognition AI Is Entering Classrooms

In a controversial move, some schools in France and Spain have begun using AI tools that claim to analyze student emotions through facial expressions and voice patterns. The goal? To improve engagement and detect distress.

But critics argue this amounts to surveillance of children, and it’s unclear how accurate or ethical these tools are. Some studies show these systems reflect cultural and racial biases, misinterpreting emotions based on flawed training data.

Parents and educators are raising alarms, and regulatory clarity is urgently needed.

6. Health AI Tools Are Being Deployed Without Transparency

Hospitals across Europe are increasingly adopting AI for diagnostics, triage, and treatment planning. While these tools can save lives, many are being rolled out with little public awareness or accountability.

In the UK and Germany, several AI tools in use have proprietary algorithms, meaning doctors and patients don’t fully understand how decisions are made. When AI makes a misdiagnosis, it’s unclear who is responsible.

Without open-source standards and independent audits, health AI remains a black box, one with life-or-death implications.

7. AI in Migration Control Is Raising Human Rights Flags

AI tools are now being used to process asylum applications, screen travelers, and monitor refugee camps. EU border agencies rely on predictive analytics to flag “high-risk” individuals based on data-driven profiling.

But this approach risks automating discrimination and dehumanizing already vulnerable populations. In some cases, people have been denied entry or benefits based on algorithmic assessments they couldn’t challenge or understand.

Human rights groups across Europe are calling for a moratorium on these tools until ethical frameworks catch up.

Conclusion: The Urgency of Transparency and Ethics

Artificial intelligence is not inherently good or bad; it’s a tool, shaped entirely by the intentions, designs, and systems created by human beings. Like any powerful technology, its outcomes depend on how thoughtfully it is developed, how transparently it is implemented, and how responsibly it is governed. And yet, in the current AI landscape across Europe, we’re seeing signs of a disturbing trajectory: one marked by haste over caution, opacity over clarity, and corporate convenience over public interest.

These seven developments, from unchecked surveillance and emotion recognition in classrooms to deepfakes influencing democracy and health algorithms making opaque decisions, aren’t fringe experiments. They are mainstream deployments, already embedded in public systems and social institutions. That makes them all the more urgent to address, because what’s at stake is not just technological progress, but the integrity of European democratic values themselves: privacy, dignity, accountability, and equity.

The European Union has long prided itself on being a leader in ethical regulation, from the GDPR to digital competition policy. But when it comes to AI, that same spirit must now become action that is bold, enforceable, and forward-thinking. That means moving beyond symbolic gestures and press releases. It means funding and empowering independent oversight bodies with real authority to audit AI systems. It means creating clear channels for citizens to contest automated decisions, especially in life-altering areas like healthcare, hiring, education, and immigration.

Crucially, it also means investing in AI literacy at scale. Because transparency isn’t just about companies revealing their algorithms; it’s about the public being equipped to understand what’s being revealed. When people understand how AI works, they’re better able to ask critical questions, demand accountability, and participate meaningfully in shaping the technology that governs their lives.

This is a pivotal moment. Europe still has the opportunity to set the global standard for human-centered, transparent, and ethical AI development. The world is watching not just to see if the EU can regulate effectively, but to see if it can model what it means to innovate without exploiting, to lead with integrity instead of urgency, and to defend human rights in the age of intelligent machines.

Because if we fail to act now, if we allow these trends to continue unchecked, the future of AI in Europe won’t be defined by innovation. It will be defined by exploitation, exclusion, and a widening gap between those who control AI and those who are controlled by it.

The time to intervene is not “someday.” It’s now. And the responsibility belongs not only to legislators and engineers, but to all of us: as citizens, as thinkers, and as stewards of a shared digital future.