6 Brilliant Lawsuits Against AI Art Generators

Introduction: When Art Fights Back

We used to think the battle between man and machine would happen in factories, in code, maybe in courtrooms filled with technical jargon and patent lawyers.

But in 2025, that battle is happening on digital canvases.

AI art generators like Midjourney, Stable Diffusion, and DALL·E have taken the creative world by storm, enabling anyone to type a prompt and produce gallery-worthy images in seconds. At first, it felt like magic. The democratization of art. The rise of new aesthetics. The age of infinite imagination.

But that magic has a cost.

Because behind the beautifully rendered portraits, dreamscapes, and fantasy scenes lies a deeper question, one that’s sparking lawsuits from the most powerful names in media and art:

“Who owns the image when the machine was trained on mine?”

In 2023 and 2024, a wave of litigation emerged. Artists, illustrators, photographers, and now entertainment giants like Disney and Universal are pushing back against the use of copyrighted material to train AI models. These aren't fringe complaints. These are full-blown legal offensives.

And whether you’re a digital artist, a technologist, or just someone who believes in fair creative credit, these lawsuits matter. They’re redefining the meaning of authorship, ownership, and creative labor in the AI era.

This article explores six of the most brilliant and consequential legal challenges to AI art generators, from lawsuits to legal warnings to policy pushes, and what they mean for the future of creativity, intellectual property, and machine learning itself.

Because the line between inspiration and exploitation is no longer philosophical. It’s legal. And it’s being drawn right now.

Why It Matters: The Future of Art Isn’t Just Creative, It’s Legal

At first, AI art felt liberating. It gave non-artists a voice. It pushed professional illustrators to explore new frontiers. It blurred the line between “maker” and “muse.”

But as these lawsuits reveal, it also did something darker: it consumed without consent.

Artists didn’t just feel copied; they felt erased. Their portfolios turned into raw material. Their styles reduced to sliders. Their names replaced by prompt tokens.

What’s at stake isn’t just royalties or credit. It’s the right to own your visual voice in a world where machines can replicate it faster, cheaper, and without asking.

These lawsuits aren’t petty or reactionary. They’re brilliant because they:

- Expose the blind spots in current IP law.

- Force tech companies to confront their ethical debt.

- Remind the world that behind every dataset is a real human story.

And win or lose, these cases are already reshaping the landscape, pushing us toward an AI future that’s not just powerful, but principled.

1. The Getty Images vs. Stability AI Case

Filed: January 2023 (U.K. High Court) and February 2023 (U.S. District Court for the District of Delaware)

Core Issue: Copyright infringement by training Stable Diffusion on millions of Getty images.

Why it matters: Getty’s library is one of the world’s largest collections of commercially licensed images. The company alleges that Stability AI scraped and copied more than 12 million of its images, complete with watermarks, to train a model that now competes directly with Getty’s own services.

Getty’s Argument:

- Unauthorized use of protected content for commercial AI development.

- Damage to its brand from AI outputs that reproduce distorted versions of the Getty Images watermark.

- Violation of licensing agreements and unfair competition.

This case is the canary in the coal mine. It tests whether training on copyrighted images can qualify as “fair use” when the resulting model is monetized, and whether a model that strips or garbles watermarks is unlawfully removing or altering copyright management information.

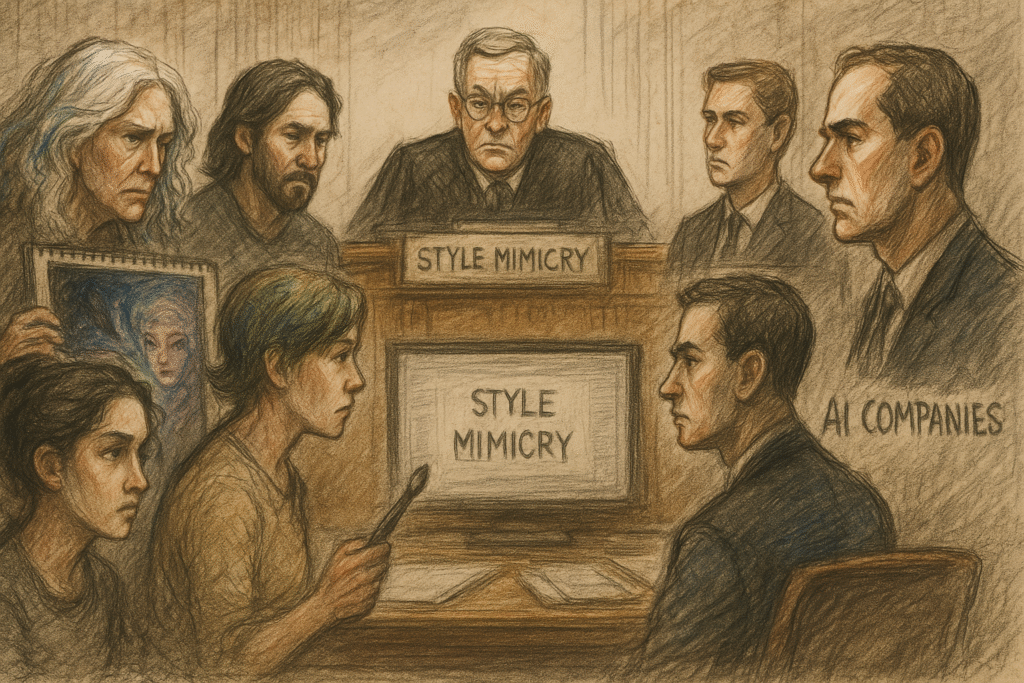

2. The Andersen v. Stability AI, Midjourney & DeviantArt Class Action

Filed: January 2023, U.S. District Court for the Northern District of California

Plaintiffs: Sarah Andersen (comic artist), Kelly McKernan, and Karla Ortiz.

Target: Stable Diffusion’s ability to generate artwork in their unique styles.

Why it matters: This case claims that AI generators can produce new works that closely resemble the original artists’ styles, effectively diluting their brand, devaluing their commissions, and infringing on style as intellectual property.

Legal Innovation:

- Seeks to establish “style mimicry” as a form of protected artistic expression.

- Alleges that mass scraping of DeviantArt portfolios was non-consensual.

This case may be the first to argue that an artist’s “style,” not just their images, is protectable. That is an uphill battle, since U.S. copyright law has traditionally protected specific works rather than styles. If successful, it could change how AI models are trained, forcing explicit opt-ins or licensing deals for individual aesthetics.

3. Disney and Universal’s Legal Warnings Against Midjourney

Timeline: Quiet legal warnings issued in 2024

Stakeholders: Disney, Universal Pictures

Issue: Midjourney users generating Disney- and Universal-style imagery, including characters and scenes.

Why it matters: These media giants own some of the most recognizable (and heavily protected) visual assets in history. They’re not waiting for the courts to decide: they’ve already sent legal warnings demanding the removal of prompts and models that replicate their IP.

Midjourney’s Response:

- Banned certain prompt phrases related to copyrighted franchises.

- Implemented moderation tools to reduce IP infringement.

This isn’t about protecting Mickey Mouse from “fan art.” It’s about control. Disney is signaling that AI art models can’t be trained on, or used to reproduce, proprietary franchises without consent, even indirectly. The era of AI-generated “Disneyfied” universes may be short-lived.

4. New York Times vs. OpenAI & Microsoft

Filed: December 2023

Claim: That ChatGPT was trained on, and can reproduce, large parts of NYT content.

Why it matters: While this case targets text generation, it sets crucial precedent for how creative content (text or visual) scraped from the internet may or may not be legally reused.

Implications for AI Art:

- If text-based models can’t use copyrighted training data without permission, art-based models may face the same outcome.

- The distinction between “transformative” and “derivative” is under the microscope.

This case is a domino. If the NYT wins, plaintiffs in visual media lawsuits could lean on that precedent to push Midjourney, OpenAI (maker of DALL·E), and others to rebuild their datasets with licensed material only.

5. German Cartoonist Sues LAION Dataset Contributors

Filed: Mid-2024

Plaintiff: Anonymized due to ongoing court gag order

Target: LAION-5B, an open image-text dataset of roughly 5.85 billion image links and captions, subsets of which were used to train Stable Diffusion.

Why it matters: The suit argues that a dataset openly indexing millions of copyrighted images, including artwork never meant for training, violates EU data protection and copyright law.

Europe’s Edge:

- Stronger digital rights enforcement under the GDPR and the EU AI Act, which entered into force in August 2024.

- Higher expectation of consent and purpose-limited data use.

This isn’t just about one cartoonist. It’s about Europe’s stricter privacy and copyright regime finally flexing against open-source datasets, which could fracture global AI development along legal borders.

6. Japan’s Manga Artists Push for Licensing Model

Date: April 2025

Initiative: A legal coalition of manga artists and publishers seeking legislative support.

Focus: Midjourney models being used to replicate popular manga drawing styles, and AI-generated doujinshi.

Why it matters: Japan’s manga industry thrives on unique visual identity. Artists claim that work defined by their brushwork, pacing, and narrative framing is being fed to models without consent.

Legislative Demand:

- Require model registries to disclose training data.

- Enforce opt-out options retroactively.

While this hasn’t become a full-blown lawsuit (yet), it shows the shift from court battles to policy pushes. If Japan codifies these demands, other creator-centric cultures (Korea, France) may follow suit.

FAQ: AI Art Lawsuits in 2025

Q1: Why are so many lawsuits focused on training data?

A1: Because most AI models are trained on vast datasets scraped from the internet, often without permission. Plaintiffs argue that this violates copyright law.

Q2: Can AI art be copyrighted?

A2: Under current U.S. and EU law, works created entirely by AI are generally not eligible for copyright. The lines get murkier when a human selects, arranges, or edits the output; the U.S. Copyright Office has indicated that prompts alone usually aren’t enough.

Q3: How are platforms like Midjourney responding?

A3: Some have implemented prompt restrictions, improved moderation, and begun negotiating licenses with stock image platforms.

Q4: Will this kill AI art?

A4: Not likely, but it may change how models are trained and how outputs are used commercially.

Final Thoughts: Between Innovation and Infringement

Every creative revolution brings friction. The printing press. Photography. Sampling in hip-hop. And now, AI art.

But friction isn’t failure. It’s the process of figuring out where the line should be drawn.

These lawsuits aren’t trying to kill AI. They’re trying to remind us that progress without principles creates tools that steal as much as they create.

And as someone who believes deeply in the future of human-machine collaboration, I’ll say this:

“If we want AI to honor human creativity, we have to start by protecting the humans behind the pixels.”

It’s not about slowing down the future. It’s about building a future where everyone gets credit for what they’ve made, even if the machine is holding the brush.